test is important for checking if the script has

successfully parsed the input stream when the end of stream is

reached. Usually this means checking for the "start token" or tokens of the

given grammar.

| check for a start token |

read;

# ... more code

(eof) {

pop;

"statement*" {

# successful parse

quit;

}

# unsuccessful pase

}

|

TAPE TEST (↑)

This test determines if the current tape cell is equal to the

contents of the workspace buffer.

| check if previous char the same as current |

read;

(==) {

put; add ".same.";

}

print; clear;

|

BEGINS WITH TEST (↑)

Determines if the workspace buffer begins with the given

text.

| only print words beginning with "wh" |

read; E" ",E"\n",(eof) { B"wh" { print; } clear; } |

ENDS WITH TEST (↑)

Tests if the workspace ends with the given text. The 'E'

(ends-with modifier) can only be used with quoted text but

not with class tests

| a syntax error, using E with a class |

r; E[abcd] { print; } clear; |

| correct using the E modifier with quoted text |

r; E"less" { print; } clear; |

| only print words ending with "ess" |

read; E" ",E"\n" { clip; E"ess" { add " "; print; } clear; } |

CONCATENATING TESTS (↑)

Conditional tests can be chained together with OR (,) or AND (.)

| test if workspace starts with "http:" OR "ftp:" |

B"http:" , B"ftp:" { print; } |

| print names of animals using OR logic concatenation |

read; [:space:] {d;} whilenot [:space:];

"lion","puma","bear","emu" { add "\n"; print; }

clear;

|

AND LOGIC CONCATENATION (↑)

It is also possible to do AND logic concatenation with the "." operator

| test if the workspace starts with 'a' AND ends with 'z' |

r; B"a".E"z" { print; clear; } |

| check if workspace is a url but not a ".txt" text file |

B"http://" . !E".txt" { add "<< non-text url"; print; clear; } |

| workspace begins with # and only contains digits and # |

B"#".[#0123456789] { } |

"AND" logic can also be achieved by nesting brace blocks. This

may have advantages.

| and logic with nested braces |

B"/" { E".txt" { ... } }

|

NEGATED TESTS (↑)

The "!" not operator is used for negating tests.

| examples of negated tests |

!B"abc", !E"xyz", ![a-z], !"abc" ... |

STRUCTURE OF A SCRIPT (↑)

LEXICAL PHASE (↑)

Like most systems which are designed to parse and compile context-free

languages parse scripts normally have 2 distinct phases: A "lexing" phase

and a parse/compile/translate phase. This is shown by the separation of the

unix tools "lex" and "yacc" where lex performs the lexical analysis (which

consists of the recognition and creation of lexical tokens), and yacc

performs parsing and compilation of tokens.

In the compile.pss program, as with many scripts that can be written

in the parse language, the lexical and compilation phases are

combined into the same script.

In the first phase, the program performs lexical analysis of the input

(which is an uncompiled script in the parse script language), and converts

certain patterns into "tokens" . In this system, a "token" is just a string

(text) terminated in an asterix (*) character. Asm.pp constructs these

tokens by using the "add" machine instruction, which appends text to the

workspace buffer.

Next the parse token is "pushed" onto the "stack" . I have quoted these

words because the stack buffer is implemented as a string (char *)

buffer and "pushing" and "popping" the stack really just involves

moving the workspace pointer back and forth between asterix characters.

I used this implementation because I thought that it would be

fast and simple to implement in c. It also means that we dont have to

worry about how much memory is allocated for the stack buffer or

each of its items. As long as there is enough memory allocated for

the workspace buffer (which is actually just the end of the stack buffer)

there will be enough room for the stack.

The lexical phase of asm.pp also involves preserving the "attribute"

of the parse token. For example if we have some text such as

"hannah" then our parse token may be "quoted.text*"

and the attribute is the actual text between the quotes 'hannah'.

The attribute is preserved on the machine tape data structure, which

is array of string cells (with no fixed size), in which data can

be inserted at any point by using the tape pointer.

PARSING PHASE (↑)

The parsing phase of the asm.pp compiler involves recognising and

shift-reducing the token sequences that are on the machine "stack" .

These tokens are just strings post-delimited with the '*' character.

Because the tokens are text, they popped onto the workspace buffer

and then manipulated using the workspace text commands.

COMMANDS (↑)

all commands have a single letter variants for the commands.

eg: p, pop, P, push, etc

COMMAND SUMMARY (↑)

All commands have an abbreviated (one letter) form as well. ++

increments the tape pointer by one (see 'increment' ) --:

decrements the tape pointer by one. (see "decrement;" ) mark "text"

adds a text tag to the current tape cell go "text"

sets the current tape cell to the marked cell add "text" / add 'text'

adds text to the end of the workspace check

jumps back to the @@@ label. This is used to ensure that

all shift reductions take place. clip

removes the last character from the workspace count

appends the counter to the end of the workspace quit

exits the script without reading anything more from

standard input. equivalant to the sed command 'q' clear

sets the workspace to a zero length string. Equivalent

to the sed command

put

puts the contents of the workspace into the current

item of the tape (as indicated by the @tape-pointer ) get

gets the current item of the tape and adds it to

the -end- of the workspace with -no- separator character swap

swaps the contents of the current tape cell and the

workspace buffer. a+

increments the accumulatorr variable/register in the virtual

machine by 1. a-

this command decrements a counter variable by one ll

appends the line number register to the workspace buffer. cc

appends the character number register to the workspace buffer print

prints the contents of the workspace buffer to the standard

output stream (stdout). Equivalent to the sed command 'p' pop

pops one token from the stack and adds it to the

-beginning- of the stack push :

pushes one token from the workspace onto the stack, or

reads upto the first star "*" character in the @workspace buffer

and pushes that section of the buffer onto the stack. unstack

pops the entire stack as a prefix onto the workspace buffer stack

pushes the entire workspace (regardless of any token delimiters

in the workspace) onto the stack. replace

replaces a string in the workspace with another string. read

reads one more character from the stdin. state

prints the current state of the virtual machine

to the standard output stream. maybe useful for debugging

I may remove this command. until 'text'

reads characters from stdin until the workspace

ends in the given text and does not end in the

second given text. This maybe used for capturing

quoted strings etc. while class/text;

reads characters from the input stream while the

@peep character -is- the character 'c' (or a character class) whilenot class/text;

reads characters from the input stream while the

'peep' register is not the character 'c' zero;

sets the counter to zero

The "add" command appends some text to the end of the 'workspace'

buffer. No other register or buffer within the virtual machine is

affected. The command is written:

| add a quote after the ':' character |

r; [\:] { add "\""; } print; clear; # non java fix!! |

r; [:] { add "\""; } print; clear; # java version |

But it shouldn't be necessary to escape ':'

The quoted argument may span more than one line. For example

begin { add '

A multiline

argument for "add".

'; } read; print; clear;

|

It is possible to use escaped characters in the quoted argument

| add a newline to the workspace after a full stop |

r; E"." { add "\n"; } print; clear; |

| Add a space after every character of the input |

read; add ' '; print; clear; |

r; a ' ';p;d; # the same. |

Add is often used to create new tokens.

| create and push (shift) a token using the "add" command |

"style*type*" {

clear;

add "command*";

push;

}

|

The script above does a shift-reduce operation while parsing some

hypothetical language. The "add" command is used to add a new token name to

the 'workspace' buffer which is then pushed onto the stack (using the 'push'

operation, naturally). In the above script the text added can be seen to be

a token name (as opposed to some other arbitrary piece of text) because it

ends in the "*" character. Actually any character could be used to delimit

the token names, put "*" is the default implementation.

The add command takes -one- and only one parameter, or argument.

REPARSE COMMAND (↑)

This command makes the interpreter jump to the parse> label. The .reparse

command takes no arguments, and is *not* terminated with a semi-colon. It

is written ".reparse" . The .reparse command is important for insuring that

all shift-reductions occur at a particular phase of a script.

If there is no 'parse>' label in the script, then it is an error to

use the ".reparse" command.

CLIP COMMAND (↑)

This command removes one character from the end of the 'workspace' buffer and

sends it into the void. It deletes it. The character is removed from the

-end- of the workspace, and so, it represents the last character which would

have been added to the workspace from a previous 'read' operation.

The command is useful for example, when parsing quoted text, used in

conjunction with the 'until' command. As in The following script only prints

text which is contained within double quote characters, from the input.

| parse and print quoted text |

read; '"' { clear; until '"'; clip; print; } |

"\"" { clear; until "\""; clip; print; } |

If the above script receives the text 'this "big" and "small" things'

as its input, then the output will be 'big small' .That is, only

that which is within double quotes will be printed.

The script above can be translated into plain english as follows

a- If the workspace is a " character then

- clear the workspace

- read the input stream until the workspace -ends- in "

- remove the last character from the workspace (the ")

- print the workspace to the console

- end if

-

The script should print the contents of the quoted text

without the quote characters because the 'clear' and

the clip commands got rid of them.

CLOP COMMAND (↑)

The "clop" command removes one character from the front

of the workspace buffer. The clop command is the counterpart of

the 'clip' command.

| print only quoted words without the quotes |

r; '"' { until '"'; clip; clop; print; } clear; |

COUNT COMMAND (↑)

The count command adds the value of the counter variable

to the end of the workspace buffer . For example, if the counter variable

is 12 and the workspace contains the text 'line:', then after the

count command the workspace will contain the text

| count the number of dots in the input |

read; "." { a+; } clear; <eof> { count; print; } |

The count command only affects the 'workspace' buffer in the virtual

machine.

QUIT COMMAND (↑)

This command immediately exits out of the script without

processing any more script commands or input stream characters.

This command is similar to the "sed" command 'q'

DECREMENT COMMAND (↑)

The decrement command reduces the tape pointer by

one and thereby moves the current 'tape' element one

to the 'left'

The decrement command is written "--" or "<"

If the current tape element is the first element of

the tape then the tape pointer is not changed and the

command has no effect.

** See also

'increment' , 'put' , 'get'

INCREMENT COMMAND (↑)

This command is simple but important. The command

adds -one- to the 'tape'-pointer. The command is written

Notice that the only part of the machine state which has

changed is that the 'tape-pointer' (the arrow in the 'tape'

structure) has been incremented by one cell.

This command allows the tape to be accessed as a 'tape' . This is

tortological but true. Being able to increment and 'decrement' the tape

pointer allows the script writer and the virtual machine to access any value

on the tape using the 'get' and 'put' commands.

It should be remembered that the 'pop' command also automatically

increments the tape pointer, in order to keep the tape pointer

and the stack in synchronization.

Since the get command is the only way to retrieve values from

the tape the ++; and --; commands are necessary. the tape

cannot be accessed using some kind of array index because

the get and 'put' commands to not have any arguments.

An example, the following script will print the string associated

with the "value" token.

pop;pop; "field*value*" { ++; get; --; print; } |

MARK COMMAND (↑)

The mark command adds a text "tag" to the current tapecell.

This allows the tapecell to be accessed later in the script with

the "go" command. The mark and go commands should allow "offside"

or indent parsing (such as for the python language)

| use mark and go to use the 1st tape cell as a buffer. |

begin { mark "topcell"; ++; }

read; [:space:] { d; }

whilenot [:space:]; put;

# create a list of urls in the 1st tapecell

B"http:" {

mark "here"; go "topcell"; add " "; get; put; go "here";

}

clear; add "word*"; push;

<eof> { go "topcell"; get; print; quit; }

|

See the script pars/eg/markdown.toc.pss for an example of using

the "mark" and "go" commands to create a table of contents for

a document from markdown-style underline headings.

GO COMMAND (↑)

The "go" command set the current tape cell pointer to a cell

which has previously been marked with a "mark" command (using

a text tag. The go/mark commands may be useful for offside

parsing as well as for assembling, for example, a table of contents

for a document, while parsing the document structure.

| basic usage |

read; mark "a"; ++; go "a"; put; clear; |

MINUS COUNTER COMMAND (↑)

The minus command decreases the counter variable by one.

This command takes no arguments and is written

The 'testeof' (eof) checks to see if the peep buffer contains the

end of input stream marker.

The 'while' command reads from the input stream while the

peep buffer is or is not some set of characters

For example

reads the input stream while the peep buffer is any one of the

characters 'abc'.

PLUS COUNTER COMMAND (↑)

This command increments the machine counter variable by

one. It is written

The plus command takes no arguments. Its counter part is the

'minus' command.

ZERO COMMAND (↑)

The "zero" command sets the internal counter to zero. This counter may be

used to keep track of nesting during parsing processes, or used by other

mundane purposes such as numbering lines or instances of a particular string

or pattern.

CHARS OR CC COMMAND (↑)

The "chars" or "cc" command appends the value of the current

character counter to the workspace register

| show an error message with a character number |

read;

[@#$%^&] {

put; clear;

add "illegal character ("; get; ") ";

add "at character number "; chars; add ".\n";

print; clear;

}

|

I originally named this command "cc" but prefer the longer

form "chars" (which is currently implemented as an alias in

the script compiler pars/compile.pss)

LINES OR LL COMMAND (↑)

The "lines" or "ll" command appends to the workspace the

current value of the line counter register. This is very useful

when writing compilers in order to produce an error message

with a line number when there is a syntax error in the input stream.

UNSTACK COMMAND (↑)

Pop the entire stack as a prefix onto the workspace. This may be

useful for displaying the state of the stack at the end of

parsing or when an error has occurred. Currently (Aug 2019) the

tape pointer is not affected by this command.

STACK COMMAND (↑)

Push the entire workspace onto the stack regardless of token

delimiters.

PUSH COMMAND (↑)

The "push" command pushes one token from (the beginning of) the

workspace onto the stack.

POP COMMAND (↑)

The pop command pops the last item from the virtual machine stack

and places its contents, without modification, at the -beginning-

of the workspace buffer, and decrements the tape pointer. If the

stack is empty, then the pop; command does nothing (and the tape

pointer is unchanged).

| pop the stack into the workspace. |

pop; |

| apply a 2 token grammar rule (a shift-reduction). |

pop;pop; "word*colon*" { clear; add 'command*'; push; } |

The pop command is usually employed in the parsing phase

of the script (not the lexing phase); that is, after the "parse>"

label. The "pop;" command is almost the inverse machine operation

of the "push;" command, but it is important to realise that

a command sequence of "pop;push;" does not always equivalent to

"nop;" (no-operation).

If the stream parser language is being used to parse and translate a

language then the script writer needs to ensure that each token ends with a

delimiter character (by default "*" ) in order for the push and pop commands

to work correctly.

The pop command also affects the "tape" of the virtual machine in

that the tape-pointer is automatically decremented by one once for

each pop command. This is convenient because it means that the

tape pointer will be pointing to the corresponding element for the

token. In other words in the context of parsing and compiling a

"formal language" the tape will be pointing to the

"value" or "attribute" for the the token which is currently in the

workspace.

PRINT COMMAND (↑)

The print command prints the contents of the workspace to

the standard output stream (the console, normally). This is

analogous to the the sed 'p' command (but its abbreviated form

is 't' not 'p' because 'p' means "pop" .

** Examples

| Print each character in the input stream twice: |

print; print; clear; |

| Replace all 'a' chars with 'A's; |

"a" { clear; add "A"; } print; clear; |

| Only print text within double quotes: |

'"' { until '"'; print; } clear; |

** Details

The print command does not take any arguments or parameters.

The print command is basically the way in which the parse-language

communicates with the outside world and the way in which it

generates an output stream. The print command does not change

the state of the pep virtual machine in any way.

Unlike sed, the parse-language does not do any "default" printing.

That is, if the print command is not explicitly specified the

script will not print anything and will silently exit as if it

had no purpose. This should be compared with the default

behavior of sed which will print each line of the input stream if

the script writer does not specify otherwise (using the -n switch).

Actually the print command is not to be so extensively used as

in sed. This is because if an input stream is successfully parsed

by a given script then only -one- print statement will be necessary,

at the end of the input stream. However the print command could be

used to output progress or error messages or other things.

GET COMMAND (↑)

This command obtains the value in the current 'tape' cell

and adds it (appends it) to the end of the 'workspace' buffer.

The "get" command only affects the 'workspace' buffer of the virtual

machine.

| if the workspace has the "noun" token, get its value and print it. |

"noun*" { clear; get; print; clear; } |

PUT COMMAND (↑)

The put command places the entire contents of the workspace

into the current tape cell, overwriting any previous value

which that cell might have had. The command is written

| put the text "one" into the current tape cell and the next one. |

clear; add "one"; put; ++; put; |

The put command only affects the current tape cell of the virtual

machine.

After a put command the workspace buffer is -unchanged- . This contrasts

with the machine stack 'push' command which pushes a certain amount of text

(one token) from the workspace onto the stack and deletes the same amount of

text from the workspace buffer.

The put command is the counterpart of the 'get' command which retrieves or

gets the contents of the current item of the tape data structure. Since the

tape is generally designed for storing the values or the attributes of parse

tokens, the put command is essentiallly designed to store values of

attributes. However, the put command overwrites the contents of the

current tape cell, whereas the "get" command appends the contents of

the current tape cell to the work space.

The put command can be used in conjunction with the 'increment' ++ and

'decrement' -- commands to store values in the tape which are not the current

tape item.

SWAP COMMAND (↑)

Syntax: swap;

Abbreviation: 'x'

Swaps the contents of the current tape cell with the workspace buffer.

| prepend the current tape cell to the workspace buffer |

swap; get; |

READ COMMAND (↑)

The read command reads one character from the input stream and places that

character in the 'peep' buffer. The character which -was- in the peep

buffer is added to the -end- of the 'workspace' buffer.

The read command is the fundamental mechanism by which the input stream is

"tokenized" also known as "lexical analysis" . The commands which also

perform tokenization are "until" , "while" and "whilenot" . These commands

perform implicit read operations.

There is no implicit read command at the beginning of a script

(unlike "sed" ), so all scripts will probably need at least one

read command.

REPLACE COMMAND (↑)

This command replaces one piece of text with another in the workspace

The replace command is useful indenting blocks of text during formatting

operations. The replace command only replaces plain text, not

regular expressions

| replace the letter 'a' with 'A' in the workspace buffer |

replace "a" "A"; |

The replace command is often used for indenting generated code.

| indent a block of text |

clear; get; replace "\n" "\n "; put; clear; |

Replace can also be used to test if the workspace contains a particular

character, in conjuntion with the "(==)" tape test.

| check if the workspace contains an 'x' |

# fragment

put; replace 'x' '';

(==) {

clear; add "no 'x'"; print; clear;

}

|

UNTIL COMMAND (↑)

Reads the input stream until the workspace ends with the given

text.

| print any text that occurs between '<' and '>' characters |

/</ { until ">"; print; } clear; |

| print only text between quote characters (excluding the quotes) |

r; /"/ { until '"'; clip; clop; print; } clear; |

| create a parse token 'quoted' from quoted text |

r; "/" { until '"'; clip; clop; put; add 'quoted*'; push; } clear; |

| print quoted text, reading past escaped quotes (\") |

/"/ { until '"'; print; } clear; |

The 'while' and 'whilenot' commands are similar to the until

command but they depend on the value of the 'peep' virtual

machine buffer (which is a single-character buffer) rather than

on the contents of the 'workspace' buffer like the until command.

** notes

The 'until' command usually will form part of the 'lexing' phase

of a script. That is, the until command permits the script to

turn text patterns into 'tokens'. While in traditional parsing tools

(such as Lex and Yacc) the lexing and parsing phases are carried out

by seperate tools, with the 'pep' tool the two functions are combined.

The until command essentially performs

multiple read operations or commands and after each read checks to see

whether the workspace meets the criteria specified in the argument.

WHILENOT COMMAND (↑)

It reads into the workspace characters from the input stream -while- the

'peep' buffer is -not- a certain character. This is a "tokenizing" command

and allows the input stream to be parsed up to a certain character without

reading that character.

The whilenot command does not exit if it reaches the end of the

input-stream (unlike 'read').

| print one word per line |

r; [:space:] { d; } whilenot [:space:]; add "\n"; print; clear; |

(there seems to be a bug in pep with whilenot "x" syntax)

| whilenot can also take a single character quote argument |

r; whilenot [z]; add "\n"; print; clear; |

| another way to print one word per line |

r; [ ] { while [ ]; clear; add "\n"; } print; clear; |

The advantage of the first example is that it allows the

script to tokenise the input stream into words

WHILE COMMAND (↑)

The 'while' command in the pattern-parse language reads the

input stream while the 'peep' buffer is any one of the

characters or character sets mentioned in the argument.

The command is written

The command takes one argument. This argument may also

include character classes as well as literal characters.

From example,

reads the input stream while the peep buffer is a digit.

The read characters are appended to the 'workspace' buffer.

The while command cannot take a quoted argument ("xxx").

Negation for the while command is currently supported

using the "whilenot" command.

ENDSWITH TEST (↑)

The 'ends with' test checks whether the workspace ends

with a given string. This test is written

The script language contains a structure to perform a

test based on the content of the workspace and to

execute commands depending on the result of that test.

An example of the syntax is

"ocean" { add " blue"; print; } |

In the script above, if the workspace buffer is the text "ocean" then the

commands within the braces are executed and if not, then not. The test

structure is a simple string equivalence test, there are -no- regular

expressions and the workspace buffer must be -exactly- the text which is

written between the // characters or else the test will fail, or return

false, and the commands within the braces will not be executed.

This command is clearly influenced by the sed

stream editor command which has a virtually identical syntax

except for some key elements. In sed regular expressions are

supported and in sed the first opening brace must be on the

same line as the test structure.

There is also another test structure in the script language

which checks to see if the workspace buffer -begins- with the

given text and the syntax looks like this

B"ocean" { add ' blue'; print; } |

the List test (not implemented as of july 2020, and I

dont think I will implement it)

A possible future "list" test could be written as

The list test determines if the workspace matches any one

of a list of strings which are contained within a text

file. In the example given above the name of the text file

is "nouns.txt" . If the "workspace;" was "dog" at the time

that the test was executed and the string "dog" was

contained in the text file "nouns.txt" , then the test would

return -true- and the instructions within the braces would

be executed. However if the word "dog" was not contained

in the text file then the test would return false, and

the instructions within the braces would -not- be

executed.

USING TESTS IN THE PEP TOOL (↑)

TEST EXAMPLES (↑)

| if the workspace is not empty, add a dot to the end of the workspace |

!"" { add '.'; } |

| if the end of the input stream is reached print the message "end of file" |

<eof> { add "end of file"; print; } |

| if the workspace begins with 't' trim a character from the end |

B"t" { clip; } |

TAPE TEST (↑)

The tape test determines if the current element of the tape structure is the

same as the workspace buffer.

The tape test is written

This test was included originally in order to parse the sed structure

s@old@new@g or s/old/new/g or s%old%new%g |

In other words, in sed, any character can be used to delimite the

text in a substitue command.

ACCUMULATOR (↑)

STACK STRUCTURE IN THE VIRTUAL MACHINE (↑)

The 'virtual'-machine of the pep language contains

a stack structure which is primarily intended to hold

the parse tokens during the parsing and transformation

of a text pattern or language. However, the stack

could hold any other string data. Each element of the

stack structure is a string buffer of unlimited size.

The stack is manipulated using the pop and push commands.

When a value is popped off the stack, that value is appended

to the -front- of the workspace buffer. If the stack is

empty, then the pop command has no effect.

TAPE IN THE PEP MACHINE (↑)

The tape structure in the virtual machine is an infinite

array of elements. Each of these elements is a string buffer

of infinite size. The elements of the tape structure may be

accessed using the @increment , @decrement , @get and @put

commands.

TAPE AND THE STACK (↑)

The tape structure in the virtual machine and the @stack

structure and designed to be used in tandem, and several

mechanisms have been provided to enable this. For example,

when a "pop" operation is performed, the @tape-pointer is

automatically decremented, and when a @push operation is

performed then the tape pointer is automatically incremented.

Since the parsing language and machine have been designed

to carry out parsing and transformation operations on

text streams, the tape and stack are intended to hold

the values and tokens of the parsing process.

BACKUSNAUR FORM AND THE PATTERN PARSER (↑)

Backus-naur form is a way of expressing grammar rules for formal languages.

A variation of BNF is "EBNF" which modifies slightly the syntax of bnf.

Sometimes on this site I use (yet another) syntax for bnf or ebnf rules but

the idea is the same.

There is close relationship between the syntax of the 'pep' language

and a bnf grammar. For example

"word*colon*" { clear; add 'command*'; push } |

corresponds to the grammar rule

SELF REFERENTIALITY (↑)

The parse script language is a language which is designed to

parse/compile/translate languages. This means that it can

recognise/parse/compile, and translate itself. The script

books/pars/translate.c.pss is an example of this.

Another interesting application of this self-referentiality

is creating a new compiling system in a different target language.

REFLECTION SELF HOSTING AND SELF PARSING (↑)

The script "compile.pss" is a parse-script which implements the

parse-script language. This was achieved by first writing a hand-coded

"assembler" program for the machine (contained in the file "asm.pp" ).

Once a working asm.pp program implemented a basic syntax for the language,

the compile.pss script was written. This makes it possible to maintain and

add new syntax to the language using the language itself.

A new "asm.pp" file is generated by running

pep -f compile.pss compile.pss >> asm.new.pp; cp asm.new.pp asm.pp |

Finally it is necessary to comment out the 2 "print" commands near

the end of the "asm.pp" file which are labelled ":remove:"

The command above runs the script compile.pss and also uses compile.pss as

its text input stream. In this sense the system is "self-hosting" and

"self-parsing" . It is also a good idea to preserve the old copy of asm.pp

in case there are errors in the new compiler.

SED THE STREAM EDITOR (↑)

The concept of "cycles" is drawn directly from the sed

language or tool (sed is a unix utility). In the sed language

each statement in a sed script is executed once for each -line-

in a given input stream. In other words there is a kind of

implicit "loop" which goes around the sed script. This loop in some

fictional programming language might look like:

| pseudo |

while more input lines

do

sed script

loop

|

In the current parse-language the cycles are executed for each

-character- in the input stream (as opposed to line).

SHIFTING AND REDUCING (↑)

There is one complicating factor which is the concept of multiple

shift-reduces during the "shift-reduce parsing one cycle or the

interpreter. This concept has already been treated within the @flag command

documentation. Another tricky concept is grammar rule precedence, in other

words, which grammar rule shift-reduction should be applied first or with

greater predence. In terms of any concrete application the order of the

script statements determines precedence.

SHIFT REDUCTIONS ON THE STACK (↑)

Imagine we have a 'pep' script as follows:

pop;pop;

"command*command*",

"commandset*command*" {

clear; add 'commandset*'; push;

}

"word*colon*" {

clear; add 'command*'; push;

}

push; push;

|

This script corresponds directly to the (e)bnf grammar rules

commandset := command , command;

commandset := commandset , command;

command := word , colon;

|

But in the script above there is a problem; that the first rule

needs to be applied after the second rule.

But it seems now that another reduction should occur namely

if-block --> if-statement block |

which can be simply implemented in the language using the

statements:

pop; pop;

"if-statements*block*" { clear; add "if-block*"; push; }

|

But the crucial question is, what happens if the statements just written

come before the statements which were presented earlier on? The problem is

that the second reduction will not occur because the script has already

passed the relevant statements. This problem is solved by the

.reparse command and the parse> label

PARSE TOKENS (↑)

LITERAL TOKENS (↑)

One trick in the parse script language is to use a "terminal"

character as its own parse-token. This simplifies the lexing phase

of the script. The procedure is just to read the terminal symbol

(one or more characters) and then add the token delimiter character

on the end.

| using literal tokens with the default token delimiter '*' |

read;

"{","}","(",")","=" {

put; add "*"; push; .reparse

}

|

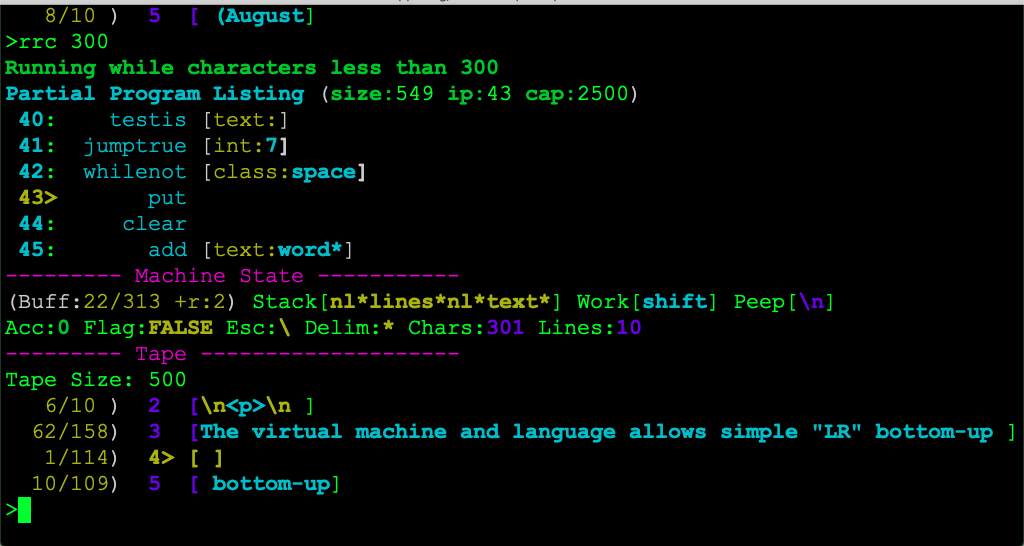

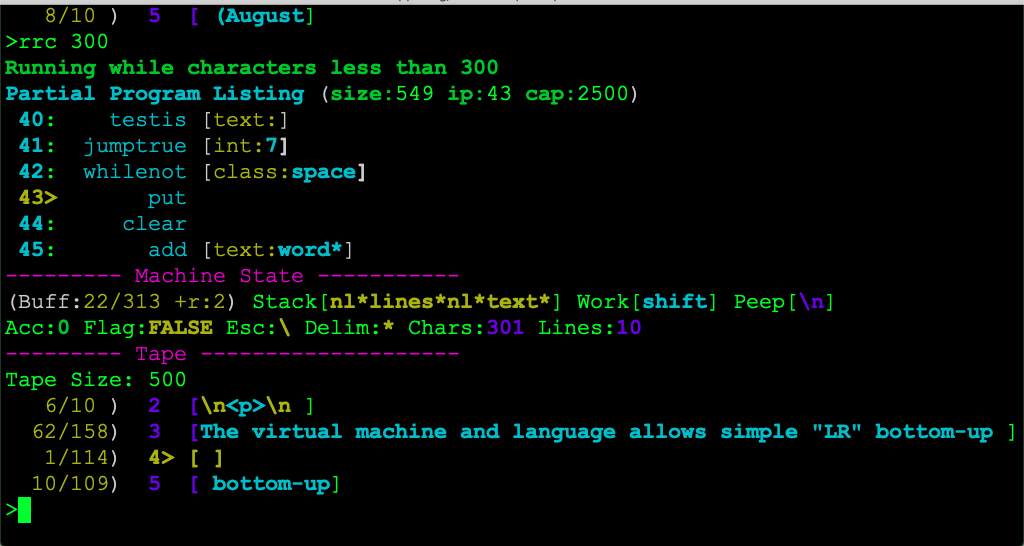

PURPOSE OF THE LANGUAGE AND VIRTUAL MACHINE (↑)

The language is designed to allow the simple creation of parsers and

translators, without the necessity to become involved in the complexities of

something like Lex or Yacc. Since the interpreter for the language is based

on a virtual machine, the language is platform independant and has a level

of abstraction.

The language combines the features of a tokenizer and parser and

translator.

AVAILABLE IMPLEMENTATIONS (↑)

http://bumble.sourceforge.net/books/pars/object/

This folder contains a working implementation in the c language.

The code can be compiled with the bash functions in the file

"helpers.gh.sh"

http://bumble.sourceforge.net/books/pars/object.js

An javascript object that can be used with the pep tool and

the script "translate.javascript.pss" to translate scripts into

javascript.

http://bumble.sourceforge.net/books/pars/translate.java.pss

COMPARISON BETWEEN PEP AND SED (↑)

The pep tool was largely inspired by the "sed" stream editor. Sed is a program

designed to find and replace patterns in text files. The patterns which Sed

replaces are "regular expressions"

SIMILARITIES (↑)

The workspace:

Both sed and the pep machine have a 'workspace' buffer (which in sed is

called the "pattern space" ). This workspace is the area where

manipulations of the text input stream are carried out. The script cycle:

The languages are based on an implicit cycle. That is to say

that each command in a sed or a pep script is executed once

for each line (sed) or once for each character (pp) Syntax:

The syntax of sed and pep are similar. Statement blocks are

surrounded by curly braces {} and statements are terminated with

a semi-colon ; .Also the sed idea of "tests" based on the contents

of the "pattern space" (or @workspace ) is used in the pep

language. Text streams:

Both sed and pep are text stream based utilities, like many

other unix tools. This means that both sed and pep consume an

input text stream and produce as output an output text stream.

These streams are directed to the programs using "pipes" | in a

console window on both unix and windows systems. For example, for

sed we could write in a console window

echo abcabcabc | sed "s/b/B/g" |

and the output would be

In the case of "pep" , the command line could be written

echo abcabcabc | pep -e "'b'{d;add'B'} print;d;" |

and the output, once again will be

-

DIFFERENCES (↑)

Lines vs characters:

Sed (like AWK) is a "line" based system whereas pep is a character

based script language. This means that the sed script is

executed once for each line of the input text stream, but in the

case of pep, the script is executed once for each character

of the input text stream. Strict syntax:

In some respects, the syntax of the pep language is stricter than

that of sed. For example, in a pep script all commands must end with a

semi-colon (except .reparse, parse> and .restart - which affect program

flow) and all statements after a test must be enclosed in curly braces.

In sed, it is not always necessary to terminate commands with a

semi-colon. For example, both of the following are valid sed statements.

White space:

Pep is more flexible with the placement of whitespace in

scripts. For example, in pep one can write

That is, the opening brace is on a different line to the

test. This would not be a legal syntax in most versions of sed. Command names:

Sed uses single character "memnonics" for its commands. For example,

"p" is print, "s" is substitute, "d" is delete. In the pep

language, in contrast, commands also have a long name, such as

"print" for print the workspace, "clear" for clear the workspace, or

"pop" to pop the last element of the stack onto the workspace.

While the sed approach is useful for writing very short, terse

scripts, the readability of the scripts is not good. Pep allows

for improved readability, as well as terseness if required. The virtual machines:

While both sed and pep are in a sense baed on

"virtual machines" , the machine for pep is more

extensive. The sed machine essential has 2 buffers, the pattern space,

and the hold space. The pep @virtual-machine ,however has a

workspace, a stack, a tape, an counter variable amongst other things. Regular expressions:

The tests or "ranges" in sed, as well as the substitutions are based

on regular expressions. In pep, however, no regular expressions are

used. The reason for this difference is that pep is designed to

parse and transform a different set of patterns than sed. The patterns

that pep is designed to deal with are referred to formally as

"context free languages" Negation of tests:

The negation operator ! in the "pep" language is placed

before the test to which is applies, where as in sed the

negation operator comes after the test or range. So,

in pep it is correct to write

But in sed the correct syntax is

implicit read and print

Sed implicitly reads one line of the input stream for

each cycle of the script. Pep does not do this, so

most scripts need an explicit "read" command at the

beginning of the script. For example

| a sed script with an implicit line read |

s/a/A/g; |

| pep has no implicit character read or print |

r; replace "a" "A"; print; clear; |

BACKUS-NAUR FORM AND THE PATTERN PARSER

Backus-naur form is a way of expressing grammar rules for formal languages.

A variation of BNF is "EBNF" which modifies slightly the syntax of bnf.

There are many versions of bnf and ebnf

There is close relationship between the syntax of the 'pep' language

and a bnf grammar. For example:

"word*colon*" { clear; add "command*"; push } |

corresponds to the backus-naur form grammar rule

PEP LANGUAGE PARSING ITSELF (↑)

One interesting challenge for the pep language is to "generate itself"

from a set of bnf rules. In other words given rules such as

command := word semicolon;

command := word quotedtext semicolon;

|

then it should be possible to write a script in the language

which generates the output as follows

pop;pop;

"word*semicolon*" {

clear; add "command*"; push; .reparse

}

pop;

"word*quotedtext*semicolon*" {

clear; add "command*"; push; .reparse

}

push;push;push;

|

When I first thought of a virtual machine for parsing languages,

I thought that it would be interesting and important to build

a more expressive language "on top of" the pep commands. However,

that now seems less important.

TOKEN ATTRIBUTE TRANSFORMATIONS (↑)

We can write complete translators/compilers with the script language.

However it may be nice to have a more expressive format like the one shown

below.

| a more expressive compiling language built on top of the parse language |

command := word semicolon {

$0 = "<em>" $1 "</em"> $2;

};

...

The $n structure will fetch the tape-cell for the corresponding

identifier in the bnf rule at the start of the brace block.

This would be "compiled" to pep syntax as

----

pop;pop;

"word*semicolon*" {

clear;

# assembling: $0 = "<em>" $1 "</em"> $2;

add "<em>"; get; add "</em>"; ++; get; --; put; clear;

# resolve new token

add "command*"; push; .reparse

}

push;push;

|

SHIFT REDUCTIONS ON THE STACK (↑)

Imagine we have a "recogniser" pep script as follows:

read;

";" { clear; add "semicolon*"; push; }

[a-z] {

while [a-z]; clear; add "word*"; push;

}

parse>

pop;pop;

"command*command*"

{ clear; add 'commandset*'; push; .reparse }

"word*semicolon*"

{ clear; add 'command*'; push; .reparse }

push; push;

(eof) {

pop; pop; push;

!"" {

clear; add "incorrect syntax! \n"; print; quit;

}

pop;

"command*", "commandset*" {

clear; add "Correct syntax! \n"; print; quit;

}

}

|

This script recognises a language which consists of a series

of "commands" (which are lower case words) terminated in semicolons.

At the end of the script, there should only be one token on the

stack (either command* or commandset*).

This script corresponds reasonable directly to the ebnf rules

word := [a-z]+;

semicolon := ';';

commandset := command command;

command := word semicolon;

|

But in the script above there is a problem; that the first rule

needs to be applied after the second rule.

The above statements in the pep language execute a reduction according to

the grammar rule written above. Examining the state of the machine

before and after the script statements above ....

==:: virtual machine

.. stack, if-statement, open-brace*, statements*, close-brace*

.. tape, if(a==b), {, a=1; b=2*a; ..., }

.. workspace,

..,

NEGATION OF TESTS (↑)

a test such as

"some-text" { #* commands *# } |

can be modified with the logic operator "!" (not) as in

!"some-text" { #* commands *# } |

Note that the negation operator '!' must come before the test which it

modifies, instead of afterwards as in sed.

Multiple negations are not allowed

!!"some-text" {...} # incorrect |

** Examples

| print only numbers in the input |

r; ![0-9] { clip; add " "; print; clear; } |

If the workspace does not begin with the text 'apple' then

print the workspace

r; E" " { !B"apple" { print; } clear; } |

If the workspace does not match any of the lines of

the file verbs.txt then add the text 'noun' to

the workspace

!=verbs.txt= { add 'noun'; } |

If the end of the input stream has not been reached

then push the contents of the workspace onto the

stack

If the value of the workspace is exactly equal to the

value of the current element of the tape, then exit

the script immediately

COMMENTS IN THE PARSER LANGUAGE (↑)

Both single line, and multiline comments are available in the

parser script language as implemented in books/pars/asm.pp and

compile.pss

| single and muliline examples |

#* check if the workspace

is the text "drib" *#

"drib" {

clear; # clear the workspace buffer

}

|

The script books/pars/compile.pss attempts to preserve comments

in the output "assembler" code to make that code more readable.

GRAMMAR AND SCRIPT CONSTRUCTION (↑)

My knowledge of formal grammar theory is quite limited. I am more

interested in practical techniques. But there is a reasonably close

correlation between bnf-type grammar rules and script construction.

The right-hand-side of a (E)BNF grammar rule is represented by the

quoted text before a brace block, and the left-hand-side

correlates to the new token pushed onto the stack.

| the rule " ::= ;" in a parse script |

"article*noun*" { clear; add "nounphrase*"; push; } |

TRICKS (↑)

This section contains tips about how to perform specific tasks

within the limitations of the parse machine (which does not have

regular expressions, nor any kind of arithmetic).

| check if accumulator is equal to 4 |

read; a+; put; clear; count;

"4" {

clear; add "4th char is '"; get; "'\n"; print; clear;

}

|

| check if the workspace matches the regex /[0-9]{3,}/ |

[0-9] { clip; clip; clip; !"" { #* <commands> *# } }

|

| see how long a word is (nochars still unimplemented) |

read;

![:space:] {

nochars; whilenot [:space:]; put; clear;

add "word length = "; chars; print; clear;

}

# if the workspace is not empty, it must be white-space

# just ignore it.

!"" { clear; }

|

The script below uses a trick of using the replace command

with the "tape equals workspace" test (==) to check if the

workspace contains a particular string.

| print only lines that contain the text 'puma' |

whilenot [\n];

put; replace "puma" ""; !(==) { clear; get; print; }

(eof) { quit; }

|

MULTIPLEXING TOKEN SEQUENCES (↑)

Sometimes it is useful to have a long list of token sequences

before a brace block. One way to reduce this list is as follows

| using nested tests to reduce token sequence lists |

pop; pop;

B"aa*","bb*","cc*" {

E"xx*","yy*","zz*" {

# process tokens here.

nop;

}

}

# equivalent long token sequence list

"aa*xx*","aa*yy*","aa*zz*","bb*xx*","bb*yy*","bb*zz*"

"cc*xx*","cc*yy*","cc*zz*" {

nop;

}

|

NOTES FOR A REGEX PARSER (↑)

| parse a regex between / and / |

#*

tokens for the regex parser

class: [^-a\][bc1-5+*()]

spec: the list and ranges in [] classes

char: one character

*#

begin { while [:space:]; clear; }

read;

!"/" {

clear; add "error"; print; quit;

}

# special characters for regex can be literal tokens

[-/\]()+*$^.?] {

"*" { put; clear; add "star*"; push; .reparse }

add '*'; push; .reparse

}

# the start of class tests [^ ... ]

"[" {

read;

# empty class [] is an error

"]" {

clear; add "Empty class test [] at char "; chars;

print; quit;

}

# negated class test

"^" { clear; add "[neg*"; push; .reparse }

# not negated

clear; add "[*char*"; push; push; .reparse

}

# just get the next char after the escape char

"\\ " {

(eof) { clear; add "error!"; print; quit; }

clear; read;

[ntfr] {

"n" { clear; add "\n"; }

"t" { clear; add "\t"; }

"f" { clear; add "\f"; }

"r" { clear; add "\r"; }

put; clear; add "char*"; push;

}

}

!"" { put; add "char*"; push; .reparse }

parse>

pop; pop;

"char*star*" { clear; add "pattern*"; .reparse }

"char*char*" { clear; add "pattern*char*"; push; push; .reparse }

"[*]*" { clear; add "error!"; print; quit; }

# 3 tokens

pop;

# parsing class tests eg: [ab0-9A-C^&*] or [^-abc]

# so the only special characters in classes are []^(negation)

# - indicates a range, except when it is the 1st char in the brackets

#

# sequences like: [*char*char* [*-*char*

B"[*",B"[neg*" {

# in brackets, a-b is a range

!E"]*".!E"-*" {

# manip attributes

clear; add "[*spec*char*"; push; push; push; .reparse

}

}

# token sequences, eg: spec/char/? spec/+/+ spec/./char

B"spec*" {

!E"]*".!E"-*" {

# manip attributes

get; ++; get; --; put;

clear; add "spec*char*"; push; push; .reparse

}

}

"[*spec*]*","[*char*]*" {

++; get; --; put;

clear; add "class*"; push; .reparse

}

"[neg*spec*]*","[neg*char*]*" {

++; get; --; put;

clear; add "negclass*"; push; .reparse

}

# end of class parsing

# a pattern can be simple, like 'a*' or complex like

# '[1-9abc\n]+' also a pattern can be a sequence of

# patterns

"pattern*pattern*" {

# this is a recogniser or checker. so just copy the patterns

# across.

get; ++; get; --; put;

clear; add "pattern*"; push; .reparse

}

push; push;

(eof) {

add "Parse stack is: "; print; clear; unstack; print; quit;

}

|

COMPILATION TECHNIQUES (↑)

One of the enjoyable aspects of this parsing/compiling machine is

discovering interesting practical "heuristic" techniques for

compiling syntactical structures, within the limitations of the

machine capabilities.

This section details some of these techniques as I discover them.

RULE ORDER (↑)

In a script, after the parse> label we can parse rules in the

order of the number of tokens. Or we can group the rules by

token. There are some traps, for example: "pop; pop;" doesnt

guarantee that there are 2 tokens in the workspace.

RECOGNISERS AND CHECKERS (↑)

A recogniser is a parser that only determines if a given string is a valid

"word" in the given language. We can extend a recogniser to be an error

checker for a given string, so that it determines at what point (character

or line number) in the string, the error occurs. The error-checker can also

give a probably reason for the error (such as the missing or excessive

syntactic element) This is much more practically useful than a recogniser examples with error messages

...

EMPTY START TOKEN (↑)

When the start symbol is an array of another token, it may often

simplify parsing to create an empty start token in a "begin" block

ebnf: text = word*

| using an empty start token |

begin { add "text*"; push; }

read;

# ignore whitespace

[:space:] { while [:space:]; clear; }

!"" { whilenot [:space:]; put; clear; add "word*"; push; }

parse>

pop; pop;

"text*word*" { clear; add "text*"; push; .reparse }

(eof) {

# check for start symbol 'text* here

}

push; push;

|

Without the empty "text*" token we would have to write

"text*word*","word*word*" { <commands> } |

This is not such a great disadvantage, but it does lead to

inefficient compiled code, because the "word*word*" token

sequence only occurs once when running the script (at the

beginning of the input stream)

| compiled code for "text*word*","word*word*" { ... } |

|

Secondly, in other circumstances, there are other advantages

of the empty start symbol. See the pars/eg/history.pss script

for an example.

END OF STREAM TOKEN (↑)

This is an analogous technique to the "empty start symbol" . In

many cases it may simplify parsing to create a "dummy" end token

when the end-of-stream is reached. This token should be created

immediately after the parse> label

| example of use of "end" token to parse dates in text |

# tokens: day month year word

# rules:

# date = day month year

# date = day month word

# date = day month end

read;

![:space:] {

whilenot [:space:]; put; clear;

[0-9] {

# matches regex: /[0-9][0-9]*/

clip; clip; !"" {

}

add "word*"; push;

}

parse>

(eof) {

}

|

PALINDROMES (↑)

Palindromes are an interesting exercise for the machine because

they may be the simplest context-free language.

The script below is working but also prints single letters

as palindromes. See the note below for a solution.

| print only words that are palindromes |

read;

# the code in this block builds 2 buffers. One with

# the original word, and the other with the word in reverse

# Later, the code checks whether the 2 buffers contain the

# same text (a palindrome).

![:space:] {

# save the current character

++; ++; put; --; --;

get; put; clear;

# restore the current character

++; ++; get; --; --;

++; swap; get; put; clear; --;

}

# check for palindromes when a space or eof found

[:space:],<eof> {

# clear white space

[:space:] { while [:space:]; clear; }

# check if the previous word was a palindrome

get; ++;

# if the word is the same as its reverse and not empty

# then its a palindrome.

(==) {

# make sure that palindrome has > 2 characters

clip; clip;

!"" { clear; get; add "\n"; print; }

}

# clear the workspace and 1st two cells

clear; put; --; put;

}

|

LINE BY LINE TOKENISATION (↑)

See the example below and adapt

| a simple line tokenisation example |

read;

[\n] { clear; }

whilenot [\n]; put; clear;

add "line*"; push;

parse>

pop; pop;

"line*line*", "lines*line*" {

clear; get; add "\n"; ++; get; --; put; clear;

add "words*"; push; .reparse

}

push; push;

(eof) {

pop; "lines*" { clear; get; print; }

}

|

| remove all lines that contain in a particular text |

until "

|

WORD BY WORD TOKENISATION (↑)

A common task is to treat the input stream as a series of space

delimited words.

| a simple word tokenisation example, print one word per line |

read; [:space:] { clear; }

whilenot [:space:]; put; clear;

add "word*"; push;

parse>

pop; pop;

"word*word*", "words*word*" {

clear; get; add "\n"; ++; get; --; put; clear;

add "words*"; push; .reparse

}

push; push;

(eof) {

pop; "words*" { clear; get; print; }

}

|

REPETITIONS (↑)

The parse machine cannot directly encode rules which contain the ebnf

repetition construct {}. The trick below only creates a new list token if

the preceding token is not a list of the same type.

| a technique for building a list token from repeated items |

# ebnf rules:

# alist := a {a}

# blist := b {b}

read;

# terminal symbols

"a","b" { add "*"; push; }

!"" {

put; clear;

add "incorrect character '"; get; add "'";

add " at position "; chars; add "\n";

add " only a's and b's allowed. \n"; print; quit;

}

parse>

# 1 token (with extra token)

pop;

"a*" {

pop; !"a*".!"alist*a*" { push; }

clear; add "alist*"; push; .reparse

}

"b*" {

pop; !"b*".!"blist*b*" { push; }

clear; add "blist*"; push; .reparse

}

push;

<eof> {

unstack; put; clear; add "parse stack is: "; get;

print; quit;

}

|

PLZERO CONSTANT DECLARATIONS (↑)

An example of parsing a repeated element occurs in pl/0 constant

declarations. The rule in Wirth's ebnf syntax, is:

constdec = "const" ident "=" number {"," ident "=" number} ";" |

Examples of valid constant declarations are

const g = 300, h=2, height = 200;

const width=3;

|

It is necessary to factor the ebnf rule to remove the repetition

element (indicated by the braces {} ). In the script below, the

repeated element is factored into the "equality" and "equalityset"

parse tokens. The script below may seem very verbose compared to the

ebnf rule but it lexes and parses the input stream, recognises

keywords and punctuation and provides error messages.

| recognise pl/0 constant declarations |

begin {

add '

recognising pl/0 constant decs in the form:

"const g = 300, h=2, height = 200;"

"const width=3;"

\n'; print; clear;

}

read;

[:alpha:] {

while [:alpha:];

# keywords in pl/0

"const","var","if","then","while","do","begin","end" {

put; add "*"; push; .reparse

}

put; clear; add "ident*";

push; .reparse

}

[0-9] {

while [0-9]; put; clear; add "number*";

push; .reparse

}

# literal tokens

",", "=", ";" { add "*"; push; }

# ignore whitespace

[:space:] { clear; }

!"" {

add " << invalid character at position "; chars;

add ".\n"; print; quit;

}

parse>

pop; pop; pop;

"ident*=*number*" {

clear; add "equality*"; push; .reparse

}

"equality*,*equality*","equalityset*,*equality*" {

clear; add "equalityset*"; push; .reparse

}

"const*equality*;*", "const*equalityset*;*" {

clear; add "constdec*"; push;

}

push; push; push;

<eof> {

pop; pop;

"constdec*" {

clear; add " Valid PL/0 constant declaration!\n";

print; quit;

}

push; push;

add " Invalid PL/0 constant declaration!\n";

add " Parse stack: ";

print; clear; unstack; print;

quit;

}

|

OFFSIDE OR INDENT PARSING (↑)

Some languages use indentation to indicate blocks of code, or

compound statements. Python is an important example. These languages

are parsed using "indent" and "outdent" or "dedent" tokens.

The mark/go commands should allow parsing of indented languages.

completely untested and incomplete...

the idea is to issue "outdent" or "indent" tokens by

comparing the current leading space to a previous

space token. But the code below is a mess.

The tricky thing is that we can have multiple "outdent*"

tokens from one space* token eg

if g==x:

while g<100:

g++

g:=0;

|

| a basic indent parsing procedure |

# incomplete!!

read;

begin { mark "b"; add ""; ++; }

[\n] {

clear; while [ ]; put; mark "here"; go "b";

# indentation is equal so, do nothing

(==) { clear; go "here"; .reparse }

add " ";

(==) { clear; add "indent*"; push; go "here"; .reparse }

clip; clip;

clip; clip;

(==) { clear; add "outdent*"; push; go "here"; .reparse }

put; clear; add "lspace*"; push;

mark "b";

}

parse>

|

OPTIONALITY (↑)

The parse machine cannot directly encode the idea of an

optional "[...]" element in a bnf grammar.

| a rule with an optional element |

r := 'a' ['b'] . |

In some cases we can just factor out the optional into alternation "|"

However once we have more than 2 or 3 optional elements in a rule, this

becomes impractical, for example

r := ['a'] ['b'] ['c'] ['d'] . |

In order to factor out the optionality above we would end up with

a large number of rules which would make the parse script very verbose.

Another approach is to encode some state into a parse token.

| a strategy for parsing rules containing optional elements |

# parse the ebnf rule

# rule := ['a'] ['b'] ['c'] ['d'] ';' .

begin { add "0.rule*"; push; }

read;

[:space:] { clear; }

"a","b","c","d",";" { add "*"; push; .reparse }

!"" { add " unrecognised character."; print; quit; }

parse>

pop; pop;

E"rule*a*" {

B"0" { clear; add "1.rule*"; push; .reparse }

clear; add "misplaced 'a' \n"; print; quit;

}

E"rule*b*" {

B"0",B"1" { clear; add "2.rule*"; push; .reparse }

clear; add "misplaced 'b' \n"; print; quit;

}

E"rule*c*" {

B"0",B"1",B"2" { clear; add "3.rule*"; push; .reparse }

clear; add "misplaced 'c' \n"; print; quit;

}

E"rule*d*" {

B"0",B"1",B"2",B"3" { clear; add "4.rule*"; push; .reparse }

clear; add "misplaced 'd' \n"; print; quit;

}

E"rule*;*" { clear; add "rule*"; push; }

push; push;

(eof) {

pop;

"rule*" { add " its a rule!"; print; quit; }

}

|

REPETITION PARSING (↑)

Similar to the notes above about parsing grammar rules containing

optional elements, we have a difficulty when parsing elements or

tokens which are enclosed in a "repetition" structure. In ebnf

syntax this is usually represented with either braces "{...}" or

with a kleene star "*" .

We can use a similar technique to the one above to parse repeated

elements within a rule.

The rule parsed below is equivalent to the regular expression

So the script below acts as a recogniser for the above regular

expression. I wonder if it would be possible to write a script

that turns simple regular expressions into parse-scripts?

In the code below we dont have any separate blist clist tokens

| parsing repetitions within a grammar rule |

# parse the ebnf rule

# rule := ['a'] {'b'} {'c'} ['d'] ';' .

# equivalent regular expression: /a?b*c*d?;/

begin { add "0/rule*"; push; }

read;

[:space:] { clear; }

"a","b","c","d",";" { add "*"; push; .reparse }

!"" { add " unrecognised character."; print; quit; }

parse>

# ------------

# 1 token

pop;

# ------------

# 2 tokens

pop;

E"rule*a*" {

B"0" { clear; add "a/rule*"; push; .reparse }

clear; add "misplaced 'a' \n"; print; quit;

}

E"rule*b*" {

B"0",B"a",B"b" { clear; add "b/rule*"; push; .reparse }

unstack; add " << parse stack.\n";

add "misplaced 'b' \n"; print; quit;

}

E"rule*c*" {

B"0",B"a",B"b",B"c" { clear; add "c/rule*"; push; .reparse }

clear; add "misplaced 'c' \n";

unstack; add " << parse stack.\n"; print; quit;

}

E"rule*d*" {

B"0",B"a",B"b",B"c" { clear; add "d/rule*"; push; .reparse }

clear; add "misplaced 'd' \n"; print; quit;

}

E"rule*;*" { clear; add "rule*"; push; }

push; push;

(eof) {

pop;

"rule*" { add " its a rule!"; print; quit; }

unstack; add " << parse stack.\n"; print; quit;

}

|

PL ZERO (↑)

Pl/0 is a minimalistic language created by Niklaus Wirth, for

teaching compiler construction.

In this section, I will explore converting the pl/0 grammar

into a form that can be used by the parsing machine and

language. I will do this in stages, first parsing "expressions"

and then "conditions" etc.

Wirths grammar is designed to be used in a recursive descent

parser/compiler, so it will be interesting to see if it can be adapted for

the machine which is essentially a LR shift-reduce parser/compiler.

The grammar below seems to be adequate for LR parsing, except for

the expression tokens (expression, term, factor etc). Apart from

expression we should be able to factor out the various [] {} and ()

constructs and create a machine parse script.

| the pl/0 grammar in wsn/ebnf form |

program = block "." .

block = [ "const" ident "=" number {"," ident "=" number} ";"]

[ "var" ident {"," ident} ";"]

{ "procedure" ident ";" block ";" } statement .

statement = [ ident ":=" expression | "call" ident

| "?" ident | "!" expression

| "begin" statement {";" statement } "end"

| "if" condition "then" statement

| "while" condition "do" statement ].

condition = "odd" expression |

expression ("="|"#"|"<"|"<="|">"|">=") expression .

expression = [ "+"|"-"] term { ("+"|"-") term}.

term = factor {("*"|"/") factor}.

factor = ident | number | "(" expression ")".

|

PROGRAMS AND BLOCKS IN PLZERO (↑)

I will try to keeps wirths grammatical structure, but factor the

2 rules and introduce new parse tokens for readability, such

"constdec*" for a constant declaration, and "vardec* for a variable

declaration. I will also try to keep Wirth's rule names for reference

| the program and block grammar rules |

program = block "." .

block = [ "const" ident "=" number {"," ident "=" number} ";"]

[ "var" ident {"," ident} ";"]

{ "procedure" ident ";" block ";" } statement .

|

Once this script is written we can check if it is parsing

correctly, and then move on to variable declarations, proceedure

declarations and so forth (but not the forth language, which doesnt

require a grammar).

PLZERO EXPRESSIONS (↑)

| wsn/ebnf grammar for pl/0 expressions |

expression = [ "+"|"-"] term { ("+"|"-") term}.

term = factor {("*"|"/") factor}.

factor = ident | number | "(" expression ")".

|

TRIGGER RULES (↑)

',' orset '{' ::= ',' test '{' ; |

NEGATED CLASSES (↑)

Often when creating a language or data format, we want to

be able to negate operators or tests (so that the test has

the opposite effect to what it normally would). In the parse

script language I use the prefixed "!" character as the

negation operator.

We can create a whole series of negated "classes" or tokens in

the following way. So the "negation" logic is actually stored

on the stack, not on the tape. This is useful because we can

compile all these negated tokens in a similar way .

| example of creating a set of negated classes |

"!*quote*","!*class*","!*begintext*", "!*endtext*",

"!*eof*","!*tapetest*" {

replace "!*" "not"; push;

# now transfer the token value

get; --; put; ++; clear; .reparse

}

|

LOOKAHEAD AND REVERSE REDUCTIONS (↑)

Quotesets have been replaced in the current (aug 2019) implementation

of compile.pss with 'ortestset' and 'andtestset'. But the compilation

techniques are similar to those shown below.

The old implementation of the "quotesets" token in old versions of

compile.pss seems quite interesting. It waits until the stack contains a

brace token "{*" until it starts reducing the quoteset list.

| a quoteset, or a set of tests with OR logic |

'a','b','c','d' { nop; } |

so the compile.pss script actually parses "'c','d' { " first, and

then resolves the other quotes ('a','b'). This is good because

the script can work out the jump-target for the forward true jump.

(the accumulator is used to keep track of the forward true jump).

It also uses the brace as a lookahead, and then just pushes it

back on the stack, to be used later when parsing the whole brace block.

| bnf rules for parsing quotesets |

quoteset '{' := quote ',' quote '{' ; |

quoteset '{' := quote ',' quoteset '{' ; |

But this has 2 elements on the left-hand side. This works but is

not considered good grammar (?)

Multiple testset sequences may be used as a poor-womans

regular expression pattern matcher. test if the workspace begins with 'http://', ends with '.txt' and

| doesnt contain the letter 'x' |

B"http://", E".txt", ![x] {

add " (found text file link!) \n"; print; clear;

}

|

It doesnt really make sense to combine a text-equals test with

any other test, but the other combinations are useful.

RABBIT HOPS (↑)

The "set" token syntax parses a string such as

The comma is the equivalent of the alternation operator (|) in

bnf syntax.

In my first attempt to parse "quoteset" tokens with the "compile.pss"

script compiler, I used a rabbit hop technique. From an efficiency

and compiled code size point-of-view this is a very bad way to

compile the code, but it seems that it may be useful in other

situations. It provides a way to generate functional code when

the final jump-target is not know at "shift-reduce" time.

| create rabbit hops for the true jump |

"quote*,*quote*" {

clear; add "testis "; get;

# just jump over the next test

add "\njumptrue 3 \n"; ++; ++;

add "testis ";

get;

add "\n";

# add the next jumptrue when the next quote is found

--; --; put;

clear;

add "quoteset*";

push;

# always reparse/compile

.reparse

}

# quoteset ::= quoteset , quote ;

"quoteset*,*quote*" {

clear; get;

++; ++;

add "jumptrue 4 \n ";

add "jumptrue 3 \n ";

add "testis "; get;

add "\n";

# add the next jumptrue when/if the next quote is found

--; --;

put; clear;

add "quoteset*";

push;

# always reparse/compile

.reparse

}

|

ASSEMBLY FORMAT AND FILES (↑)

The implementation of the language uses an intermediary "assembly"

phase when loading scripts. asm.pp is responsible for converting

the script into an assembly (text) format. "asm.pp" is itself an

assembly file. These files consist of "instructions" on the virtual

machine, along with "parameters" , jumps, tests and labels, which make

writing assembly files much easier (instead of having to use line

numbers). So the asm.pp file actually implements the script language

The proof is in the pudding: this shows that the parse-machine is

capable of implementing "languages" (or at least simple ones).

It should not normally be necessary to write any assembler code,

since the script language is much more readable. However, it

is useful for debugging scripts to view the assembly listing as

it is loaded into the machine (the -I switch allows this).

The assembler file syntax is similar to other machine assemblers:

1 command per line, leading space is insignificant. Labels are

permitted and end in a ":" character.

| an example compilation of a basic script |

# read; "abc" { nop; }

start:

read

testis "abc"

jumpfalse block.end.21

nop

block.end.21:

jump start

|

| another compilation example |

# begin { whilenot [:space:]; clear; } read; [:space:] { d; }

# compilation:

whilenot [:space:]

clear

start:

read

testclass [:space:]

jumpfalse block.end.60

clear

block.end.60:

jump start

|

COMPARISON WITH OTHER COMPILER COMPILERS (↑)

As far as I am aware, all other compiler compiler systems take

some kind of a grammar as input, and produce source code as

output. The produced source code acts as a "recogniser" for

strings which conform to the given grammar.

YACC AND LEX COMPARISON (↑)

The tools "yacc" and "lex" and the very numerous clones, rewrites

and implementations of those tools are very popular in the implementation

of parsers and compilers. This section discusses some of the

important differences between the parse-machine and language and

those tools.

The pep language and machine is (deliberately) a much more limited system

than a "lex/yacc" combination. A lex/yacc-type system often produces "c"

language code or some other language code which is then compiled and run to

implement the parser/compiler.

The pep system, on the other hand, is a "text stream filter" ; it simply

transforms one text format into another. For this reason, it cannot

perform the complex programmatic "actions" that tools such as lex/yacc

bison or antlr can achieve.

While clearly more limited than a lex-yacc style system, in my opinion

the current machine has some advantages: It is much simpler and there should be much easier to understand It does not make use of shift-reduce tables. It should be possible to implement it on much smaller systems. Because it is a text-filter, it should be more accessible for

"playing around" or experimentation. Perhaps it lacks the

psychological barrier that a lex-yacc system has for a

non-specialist programmer. It may be simpler and faster to port the system to new platforms/

languages. All that is required is a parse-machine "object" in the

target language and a script similar to "translate.c.pss"

STATUS (↑)

As of july 2020, the interpreter and debugger written in c (i.e /books/pars/gh.c )

works well. However it is not Unicode "aware" . A number of interesting

and/or useful examples have been written using the "pep" script language

and are in the folder /books/pars/eg/

The scripts which are designed to compile pep scripts into other languages

(java, javascript, python etc) are at varying stages of incompleteness.

The translate.java.pss and translate.javascript.pss are currently the most

complete, but have not been thoroughly tested. The most comprehensive way to

test them is to run them on themselves, with, for example:

pep -f translate.java.pss translate.java.pss > Machine.java |

NAMING OF SYSTEM (↑)

The executable is called 'pep' standing for "Parse Engine for Patterns"

The folder is called /books/pars/

The source file is called gh.c for no particularly good reason.

Pep scripts are given a ".pss" file extension, and files in the

"assembler format have a " .pp" file extension.

The source files are split into .c files where each one corresponds to a

particular "object" (data structure) within the machine (eg tapecell, tape,

buffer with stack and workspace). Previously I have called this system

"chomski" after the linguist Noam Chomsky, but I don't like that name

anymore.

'pep' is is not an "evocative" name (unlike, for example, "lisp" ),

but it fits with standard short unix tool naming.

Another possible name for the system could be "nom" which is a

slight reference to "noam" and also an indo-european (?) root

for "name"

LIMITATIONS AND BUGS (↑)

- The main interpreter 'pep' (source /books/pars/object/gh.c) is

written using plain c byte characters. This seemed a big limitation, but

the scripts translate.xxx.pss may be a simple way to accomodate unicode

characters without rewriting the code in gh.c